Vega protocol: Fair access to efficient & resilient derivatives markets

by Felix Machart, May 24, 2021

Having been backers of Vega since the Seed round (check out our previous post), we have been incredibly impressed with the advancements of their tech stack as well as the community they have been gathering along the way.

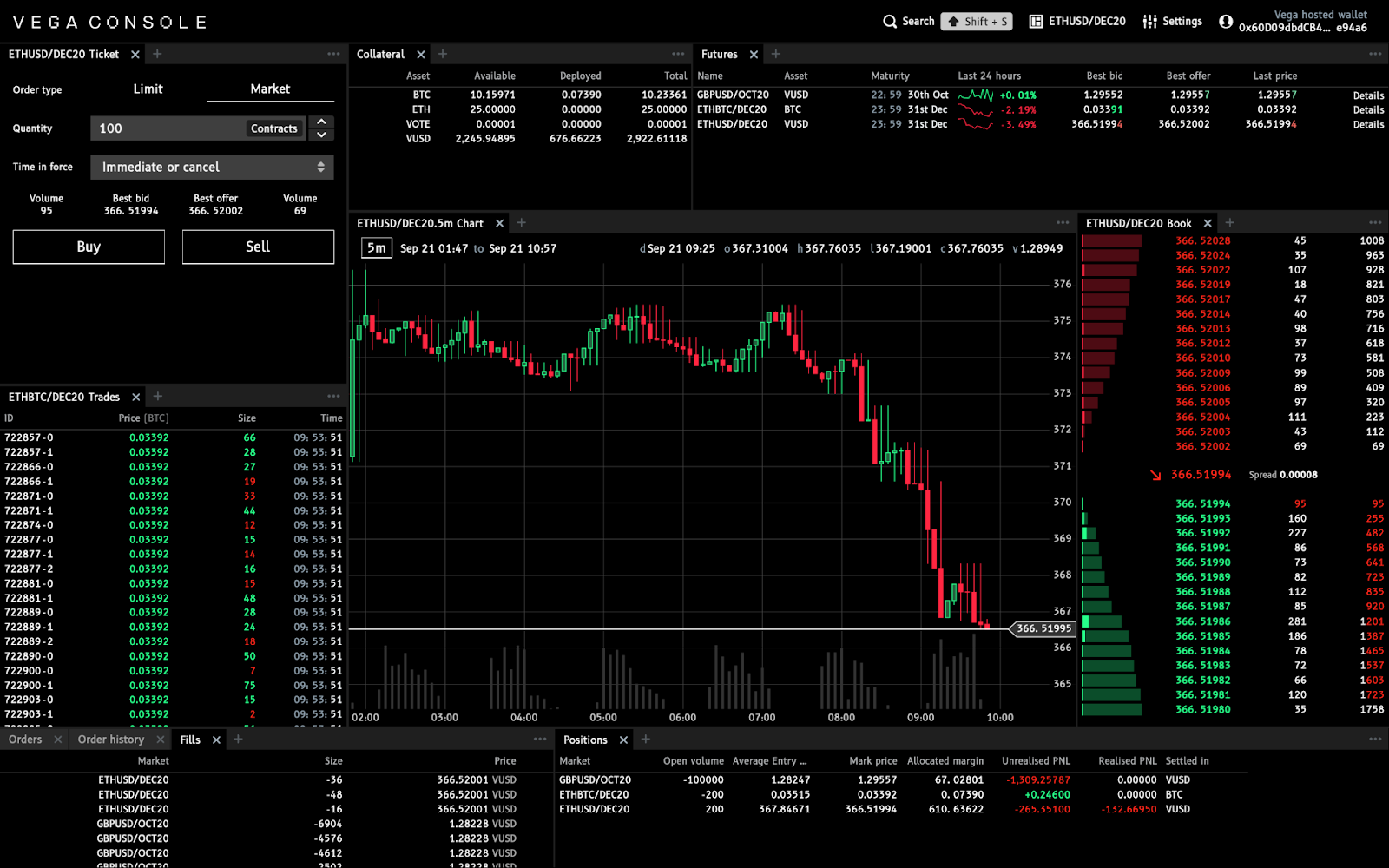

Vega is a decentralized derivatives trading protocol with continuous limit order books or mechanisms such as frequent-batch auctions, constructed as a special purpose layer 1 blockchain, with cross-chain collateral deposits.

It can be seen as a decentralized CCP (central counterparty), in that it is the network that holds collateral/margin, maintains insurance funds, applies risk management and settles trades. The network automatically enforces rules around market safety in a trust-minimized way. The outcome is a system that vastly outperforms traditional markets in metrics of cost, market access, fairness, transparency, data access and speed of trade lifecycle.

Decentralization and permissionless access promote resilience and innovation through avoiding single points of failure and enabling a global audience to build on top of protocols. They counteract monopolization by creating a competitive market for node/server infrastructure as well as liquidity provision, while remaining a single logical unit with coherent risk management. This should ultimately lead to lower costs, higher security and better user experience.

Anyone with an internet connection will get efficient and fair access to fine-grained risk-hedging and exposure instruments with professional-grade tooling.

In this post, I would like to highlight some problems that plague traditional markets as well as today’s crypto counterparts, for which Vega has especially interesting solutions.

Rent extraction by privileged parties & low quality liquidity

In traditional financial markets there has been a trend towards high-frequency trading (HFT), the practice of exploiting speed advantages on the order of milliseconds, which is estimated to make up around half of all trading volume. Proponents argue that the increased liquidity allows for more efficient trading for regular market participants.

While liquidity provision in general is useful, latency arbitrage, the practice of sniping stale orders on an order book, is harmful.

In order to understand why, consider the race from a liquidity provider’s (LP’s) perspective. Suppose there is a publicly observable news event that causes the quote of an LP to become stale (no longer reflecting the available information). While the LP will try to adjust the stale quote, many other market participants will try to pick it off. In a continuous limit order book (CLOB), messages are processed one after the other in a serial fashion. Even if all participants are equally fast, the LP is one against many and will lose the race most of the time. As a result of this zero-sum speed race the LP’s activity becomes more costly, which ultimately leads to higher costs for the regular investor, whom LPs are supposed to be servicing.
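The "one against many" argument can be made concrete with a small simulation. This is only a sketch under simplifying assumptions that are not from any exchange specification: the LP sends one cancel message, each sniper sends one order, all messages arrive at effectively the same moment, and the serial processing order is uniformly random. The function name `lp_win_rate` is hypothetical.

```python
import random


def lp_win_rate(num_snipers: int, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate how often the LP's cancel is processed before any snipe."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        # One LP message plus one message per sniper are queued serially.
        # With equal speed, the LP's position in the queue is uniformly
        # random among the num_snipers + 1 slots (assumption).
        lp_position = rng.randrange(num_snipers + 1)
        if lp_position == 0:
            wins += 1
    return wins / trials
```

Under these assumptions the LP saves its quote with probability 1 / (N + 1) for N snipers, so with nine equally fast snipers `lp_win_rate(9)` comes out close to 0.1.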

A recent study by the UK Financial Conduct Authority (FCA) finds that eliminating such latency arbitrage would reduce the cost of trading by 17% and that the total sums at stake are on the order of $5 billion annually in global equity markets alone.

Such arbitrage opportunities have led to a socially wasteful arms race in acquiring slight speed advantages over competitors, while not actually contributing to incorporating new fundamental information into the market (which would contribute to efficiency). One prominent example is the $300M that was spent on reducing latency from New York to Chicago by 3ms with a straight-line instead of a zig-zag fibre-optic cable (Spread Networks).

Exchanges are able to exploit their market power to earn significant revenues from the sale of co-location services and proprietary data feeds: an estimated $1B in 2015 for equities exchanges (BATS, NYSE & NASDAQ), or on the order of five times their regular-hours trading fees. The large derivatives exchange CME made close to half a billion dollars from co-location and data fees, while being able to charge significantly more in trading fees thanks to its proprietary derivatives products, making $2.8B in the same time frame (Budish et al.). In 2020 CME made $3.9B from trading fees, $550M from data fees and $440M from other sources, which include co-location services (CME filing).

Propensity to flash-crashes

HFT can also lead to sudden price moves (flash crashes), such as in equities markets in 2010 or in crypto last March. While it is debated whether HFT was the root cause, it is relatively clear that it at least contributed by exacerbating the moves. As volatility spikes, many speed traders turn off their machines, accelerating a selloff by drying up liquidity, with fewer of them willing to buy into cascading sell orders triggered by falling prices. This exemplifies how such liquidity is of low quality (“ghost liquidity”): available one second and gone the next, exactly at the point when it is needed most.

Frequent batch-auctions to the rescue

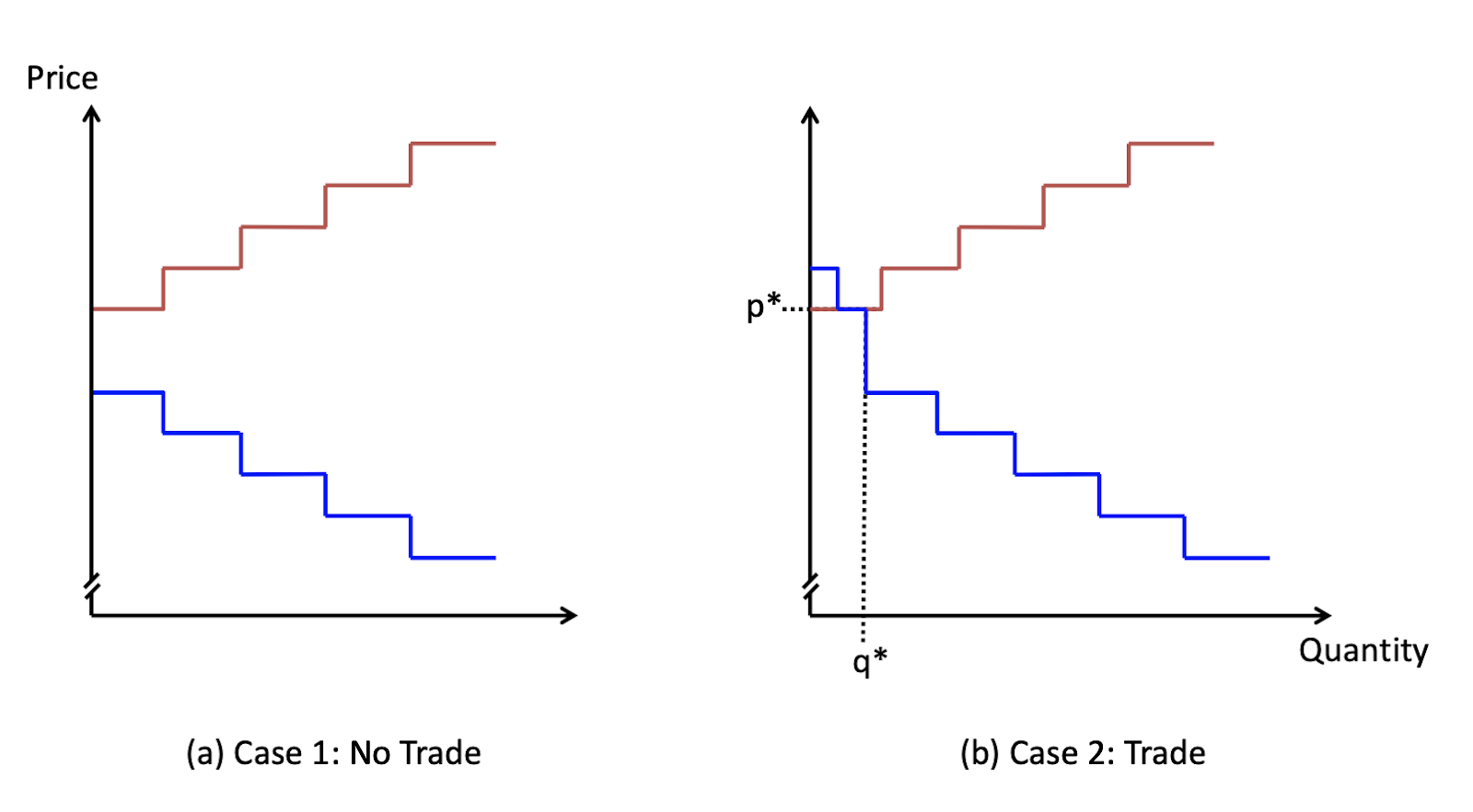

It has been suggested that frequent batch-auctions could avoid the negative effects of HFT. Orders would be collected and settled against each other at short intervals, e.g. every tenth of a second, which would not make any significant difference in user experience for parties that want to manage risk or get exposure to an asset.

Batch-auctions eliminate the wasteful and socially harmful arms race for two reasons: they remove the value of tiny speed advantages (if trades are settled every second or every tenth of a second, nanoseconds do not matter), and they shift competition from speed to price.
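To illustrate why arrival order stops mattering inside a batch, here is a minimal sketch of a generic uniform-price batch auction. It is not Vega's matching engine: the function name `clear_batch`, the `(limit price, quantity)` order representation and the tie-break (lowest price among those with maximal volume) are all assumptions made for the example.

```python
from typing import List, Optional, Tuple

Order = Tuple[float, float]  # (limit price, quantity), hypothetical format


def clear_batch(buys: List[Order], sells: List[Order]) -> Optional[Tuple[float, float]]:
    """Return (clearing price, matched volume) for one batch, or None."""
    # Only prices that appear in the batch can change the matched volume.
    candidates = sorted({p for p, _ in buys} | {p for p, _ in sells})
    best = None
    for price in candidates:
        # Buyers accept any price at or below their limit,
        # sellers any price at or above theirs.
        demand = sum(q for p, q in buys if p >= price)
        supply = sum(q for p, q in sells if p <= price)
        volume = min(demand, supply)
        # Strict comparison keeps the lowest price on ties (assumption).
        if volume > 0 and (best is None or volume > best[1]):
            best = (price, volume)
    return best
```

With buys `[(101, 5), (100, 5), (99, 5)]` and sells `[(98, 4), (100, 4), (102, 4)]` the batch clears at a price of 100 with a volume of 8. The result is the same if the lists are reversed, since nothing in the computation depends on when an order arrived within the batch, only on its price.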

However, existing exchanges do not have sufficient incentives to adopt such new market designs precisely because they are able to extract rents from the inefficiencies that these alternatives are aiming to eliminate.

Miner extractable value (MEV) on blockchains

Blockchains and their financial applications have their own set of issues. Existing blockchains ignore the challenge of ordering transactions in a fair manner to a large extent and mostly provide explicit ways to front-run based on the fact that users can get their transaction included earlier by paying a higher gas/transaction fee than someone else in e.g. Ethereum.
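A stylized sketch of fee-priority ordering shows how front-running falls out of it. The `Tx` type and `order_by_fee` function are hypothetical, and real block producers are free to use more complex ordering policies than this simple sort.

```python
from typing import List, NamedTuple


class Tx(NamedTuple):
    sender: str
    gas_price: int  # fee offered per unit of gas
    arrival: int    # order in which the transaction reached the mempool


def order_by_fee(mempool: List[Tx]) -> List[Tx]:
    """Order pending transactions by highest fee first, then by arrival."""
    return sorted(mempool, key=lambda tx: (-tx.gas_price, tx.arrival))
```

If a victim's transaction arrives first with a gas price of 50 and a front-runner who observes it submits a competing transaction with a gas price of 51, `order_by_fee` places the front-runner ahead despite the later arrival.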

Based on this, the issue of excessive miner extractable value arises (MEV, Flash Boys 2.0). MEV is the profit a miner, or another privileged protocol actor in consensus such as a validator in proof of stake, can make by arbitrarily including or reordering transactions in the blocks they produce. Today it is mainly traders who extract value through front-running strategies, with miners benefitting from the increased fees from gas races. However, it is likely that miners will increasingly run such strategies themselves. These possibilities cause significant negative externalities in the form of a clogged mempool and block-space as well as the resulting fee spikes. Projects such as Flashbots aim at democratizing the practice and at least reducing such negative externalities, while not eliminating the root cause.

Fairness in Vega’s protocol design and efficient market design

Definitions of (relative) fairness

Vega solves for relative fairness — ways to assure that the relative order of transactions is fair. The goal is to build a module that can be added to existing blockchain designs — “Wendy the good little fairness gadget”.

The exact definition of fairness that is required for specific applications can differ. Thus, Vega intends to provide a library of fairness pre-protocols run by validators in parallel to the actual blockchain for markets building on the blockchain to choose from.

Wendy basically creates virtual blocks of transactions that need to be scheduled together in order to comply with the specific fairness rules in question.

As a standard, Vega aims to ensure that a transaction sent to the network first within a given market is also executed first, without a major impact on latency. However, the exact definition of relative fairness has a couple of subtleties.

Unfortunately, the ideal property of “If all honest parties saw transaction a before transaction b, then a is executed before b” is impossible to achieve even if only one validator is not acting honestly.

The second best approach is to guarantee that transactions a and b are at minimum settled in the same block, which is possible, but leads to degraded performance, as there is no limit on when requests are delivered or how big a block becomes. Cornell researchers also suggest this type of fairness in a recent paper.

To resolve this challenge, Vega is proposing a definition of relative fairness around the use of local time, which can be guaranteed without degrading performance significantly: “If there is a time t such that all honest validators saw a before t and b after t, then a must be scheduled before b”. There is no need for the local clocks to be synchronized; however, the definition does make more sense in practice with roughly synced clocks (as otherwise delays happen).
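The local-time definition can be written as a simple check. The sketch below assumes we are handed the local receive timestamps of the honest validators only, which a real protocol cannot know directly; the function name `must_precede` and the dictionary layout are hypothetical. A time t separating the two transactions exists exactly when the latest sighting of a is earlier than the earliest sighting of b.

```python
from typing import Dict


def must_precede(seen_a: Dict[str, float], seen_b: Dict[str, float]) -> bool:
    """True if some time t has every validator seeing a before t and b after t.

    seen_a and seen_b map a validator id to the local time at which that
    (assumed honest) validator first saw transaction a or b.
    """
    validators = seen_a.keys() & seen_b.keys()
    if not validators:
        return False
    latest_a = max(seen_a[v] for v in validators)
    earliest_b = min(seen_b[v] for v in validators)
    # Any t strictly between latest_a and earliest_b separates a from b.
    return latest_a < earliest_b
```

For example, with `seen_a = {"v1": 1.0, "v2": 1.2, "v3": 0.9}` and `seen_b = {"v1": 1.5, "v2": 1.3, "v3": 1.4}` the check returns `True`. If `v3` instead saw b at 1.1, every validator would still have seen a before b individually, but no common t exists (1.2 is not before 1.1), so this definition would not force an order. That is the sense in which it is weaker, and cheaper to guarantee, than the ideal property.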

For an improved trade-off, their current approach is a hybrid of block fairness and fairness around local time. Under normal operation, the protocol will guarantee block fairness. However, if a performance bottleneck is detected (through a threshold of delayed requests due to impossible fair ordering), the latter approach is utilized on a limited time-scale.

Fair, decentralized trading protocols to prevent front-running in CLOB

Different markets might require different definitions of fairness and not all transactions need to be ordered fairly against each other, so every transaction can get one or several labels in order to be scheduled accordingly. For example a market for wheat futures would not interfere with derivatives on gas fees on Ethereum.

Wendy only assures block order fairness, but a block contains enough information to also assure (some) fairness between individual transactions, so that front-running and latency arbitrage become impossible or at least unlikely to be successful in most cases.

It could be possible, however, for well-resourced participants to operate clients on high-speed connections at the minimum distance to the majority of validators, which could initiate an arms race similar to the one described earlier. To counter that, adding a random value of 1 to 10 milliseconds to the timestamp of each received transaction would eliminate the business case for spending money on shaving off nanoseconds.
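A quick simulation shows why such a random offset removes the payoff of a tiny head start. This is a sketch only: the uniform distribution, the constants and the function names are assumptions for illustration, not details of the Vega protocol.

```python
import random

JITTER_MIN_MS = 1.0   # lower bound of the random offset (from the text)
JITTER_MAX_MS = 10.0  # upper bound of the random offset (from the text)


def jittered_timestamp_ms(received_ms: float, rng: random.Random) -> float:
    """Add a random offset to a receive timestamp (uniform is an assumption)."""
    return received_ms + rng.uniform(JITTER_MIN_MS, JITTER_MAX_MS)


def head_start_win_rate(head_start_ms: float, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate how often the faster sender still ends up ordered first."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        # The fast sender arrives head_start_ms before the slow one.
        fast = jittered_timestamp_ms(-head_start_ms, rng)
        slow = jittered_timestamp_ms(0.0, rng)
        if fast < slow:
            wins += 1
    return wins / trials
```

With a head start of one microsecond (`head_start_win_rate(0.001)`) the faster sender is ordered first only about half of the time, which is no better than a coin flip. Only a head start larger than the width of the jitter window guarantees priority.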

Low barriers to entry & aligning incentives with LPs for more efficient markets

On Vega, being a permissionless trading protocol, anyone can launch a market with highly customizable design patterns, including frequent-batch auctions (watch co-founder Barney discuss the targeted use of auctions). The barriers to entry are low, and market creators, who will be the first committed liquidity providers, reap the largest share of fee revenues. Together, this should lead to healthy competition between market designs and ultimately eliminate excessive rent seeking through extractive market practices.

The competition shifts from a timing arms-race to an arms-race in launching well-designed markets that cater to user needs in the best way possible, as LPs become market operators.

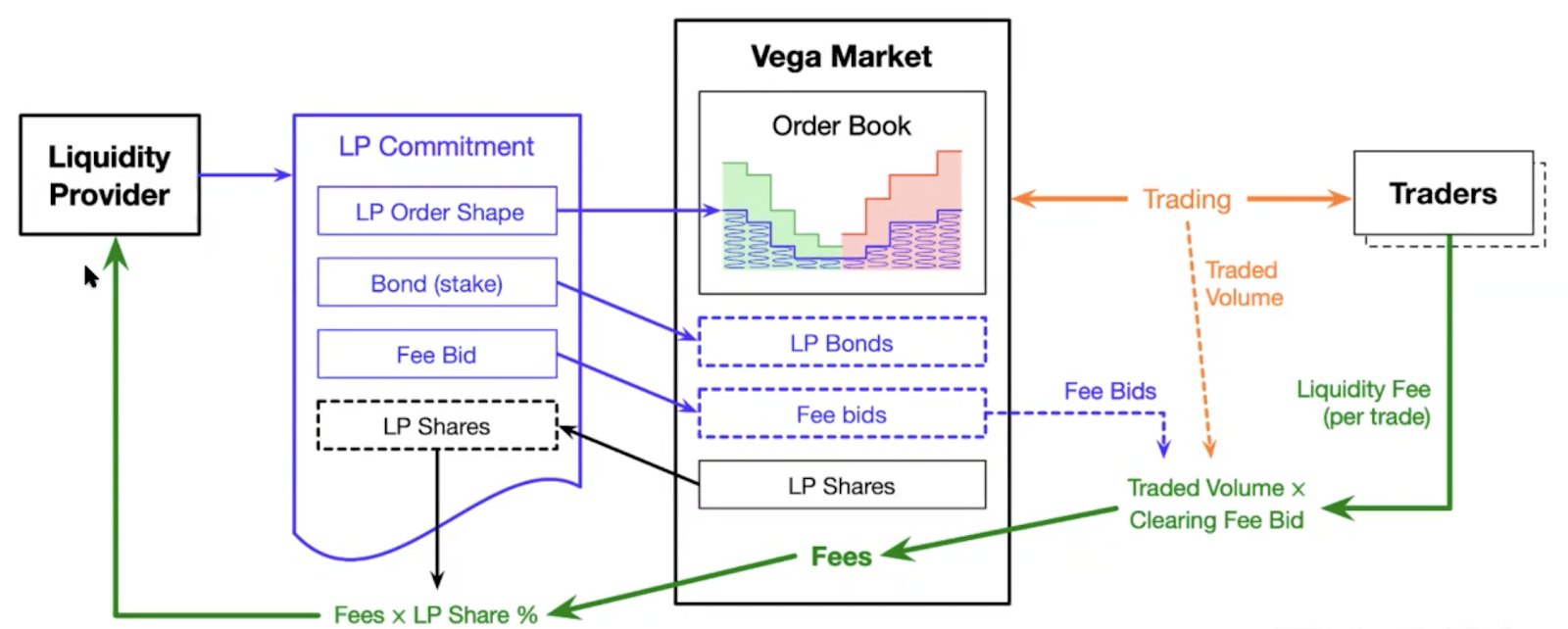

LPs need to make a financial commitment (bond) to provide liquidity at a certain level — the bond determines how much liquidity an LP provides — in order to receive rewards, while being penalized for not upholding the commitment. Vega will automatically size orders, as long as sufficient margin is provided.

Incentives to join early — a land-grab for novel derivatives markets

The more liquidity an LP provides and the earlier she joins a market, the more LP shares she receives and the higher percentage of the trading fees from price takers she receives.

Liquidity is not only desirable from a trader’s perspective, but it’s also crucial for risk-management. Distressed positions can only be liquidated if there is sufficient volume on the order book to offload them.

Thus, Vega continuously computes whether each market has a sufficient level of liquidity committed, and places the market into a liquidity monitoring auction when it is deemed insufficiently liquid. The liquidity requirement is based on the maximum open interest observed over a rolling time window.
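The rolling-window requirement could be tracked roughly as follows. This is a sketch under assumptions: the class name `LiquidityMonitor`, the `scaling_factor` that converts open interest into required liquidity, and the simple comparison used to trigger the auction are all hypothetical and stand in for parameters the post does not specify.

```python
from collections import deque
from typing import Deque, Tuple


class LiquidityMonitor:
    def __init__(self, window_seconds: float, scaling_factor: float) -> None:
        self.window_seconds = window_seconds
        self.scaling_factor = scaling_factor  # hypothetical parameter
        self._observations: Deque[Tuple[float, float]] = deque()

    def observe(self, timestamp: float, open_interest: float) -> None:
        """Record open interest and drop observations older than the window."""
        self._observations.append((timestamp, open_interest))
        cutoff = timestamp - self.window_seconds
        while self._observations and self._observations[0][0] < cutoff:
            self._observations.popleft()

    def required_liquidity(self) -> float:
        """Requirement derived from the maximum open interest in the window."""
        if not self._observations:
            return 0.0
        return self.scaling_factor * max(oi for _, oi in self._observations)

    def needs_auction(self, committed_liquidity: float) -> bool:
        """True if committed liquidity falls short of the requirement."""
        return committed_liquidity < self.required_liquidity()
```

Because the requirement follows the window maximum rather than the latest value, a brief dip in open interest does not immediately lower the bar for LPs; the requirement only relaxes once the peak has aged out of the window.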

LP order shape

Vega enables market designs that combine the best of both worlds from constant function automated-market makers and the flexibility of manual market making that can react to the idiosyncrasies of specific conditions.

Pegged orders can be leveraged to always move relative to the currently best order on the book (e.g. order peg: bid +5bps 10%, bid + 10 40%, etc.).

Fee bid

All LPs submit fee bids that collectively determine the clearing fee for all LPs, through an auction mechanism that takes into account the liquidity demand of the market with a margin of safety: the clearing fee is the lowest fee that satisfies all demand.
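One way to read "lowest fee that satisfies all demand" is sketched below. The function name `clearing_fee`, the `(fee bid, committed liquidity)` representation and the fallback when total commitments fall short are assumptions; `liquidity_demand` is taken to already include the margin of safety.

```python
from typing import List, Tuple


def clearing_fee(bids: List[Tuple[float, float]], liquidity_demand: float) -> float:
    """Return the fee of the marginal LP needed to cover the demand.

    bids holds (fee bid, committed liquidity) pairs, one per LP.
    """
    if not bids:
        raise ValueError("at least one LP fee bid is required")
    covered = 0.0
    # Walk through the bids from cheapest to most expensive.
    for fee, commitment in sorted(bids):
        covered += commitment
        if covered >= liquidity_demand:
            return fee
    # Demand exceeds all commitments: fall back to the highest bid (assumption).
    return max(fee for fee, _ in bids)
```

For bids `[(0.003, 500), (0.001, 300), (0.002, 400)]` and a demand of 600, the two cheapest LPs together commit 700, so the clearing fee is 0.002 and applies to all LPs. LPs bidding a low fee therefore help set a competitive price without being paid less than the clearing fee themselves.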

We at Greenfield One are extremely proud to be working with the Vega team on the cutting edge of derivatives market design in order to enable more fair and efficient access.

To learn more on Vega, check out the link-tree, follow them on Twitter and join the community (Discord / Forum / Telegram)!

Thanks to my dear colleagues Georg Reichelm, Gleb Dudka and Jendrik Poloczek for their helpful feedback!